Storage is cheap, and it is used as leverage for building many high-performance systems. If data is immutable, it is safe to share it and to maintain multiple copies of it for different access patterns.

Many old design ideas such as append-only logs, copy-on-write, log-structured merge trees, materialized views, and replication are becoming popular again thanks to affordable storage.

In software we have seen many examples where immutability is a key design decision: Spark is based on immutable RDDs; many key-value stores such as LevelDB, RocksDB, and HBase are built on immutable storage tables; column databases such as Cassandra also take advantage of it; and HDFS is built on immutable file chunks/blocks.

It is interesting to see that our hardware friends are also using immutability.

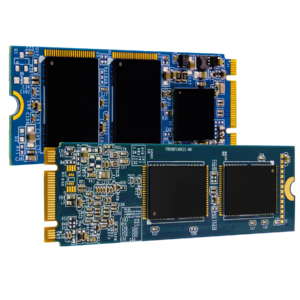

A solid-state drive (SSD) is divided into physical blocks, and each block supports a finite number of writes; every write operation causes some wear and tear on the block. Chip designers use a technique called wear leveling to distribute the write load evenly across blocks. The disk controller tracks the number of writes each block has gone through using non-volatile memory.

Wear leveling is based on the copy-on-write pattern, which is a flavor of immutability.

The disk maintains a logical address space that maps to physical blocks. The blocks that store the logical-to-physical mappings support more write operations than normal data blocks.

Each write operation, whether it is new data or an update, is written to a new location in circular fashion to even out writes. This also helps in giving write guarantees when a power failure happens.
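To make the idea concrete, here is a minimal sketch of such a mapping layer. It is a toy model, not how any real controller firmware works: the class name `TinyFTL`, its fields, and the assumption that a stale block can be reused immediately (no erase step, no garbage collector) are all made up for illustration.

```python
class TinyFTL:
    """Toy mapping layer: logical addresses point at physical blocks."""

    def __init__(self, num_blocks):
        self.num_blocks = num_blocks
        self.data = [None] * num_blocks       # contents of each physical block
        self.write_counts = [0] * num_blocks  # wear counter per physical block
        self.mapping = {}                     # logical address -> physical block
        self.cursor = 0                       # next candidate block, moves circularly

    def _next_free_block(self):
        # Blocks currently referenced by the mapping hold live data.
        live = set(self.mapping.values())
        for _ in range(self.num_blocks):
            candidate = self.cursor
            self.cursor = (self.cursor + 1) % self.num_blocks
            if candidate not in live:
                return candidate
        raise RuntimeError("no free physical block")

    def write(self, logical, value):
        # Copy-on-write: never overwrite in place. Write to a fresh block,
        # then repoint the mapping. The old block becomes stale and reusable.
        target = self._next_free_block()
        self.data[target] = value
        self.write_counts[target] += 1
        self.mapping[logical] = target

    def read(self, logical):
        return self.data[self.mapping[logical]]


ftl = TinyFTL(num_blocks=4)
for version in range(8):
    ftl.write("page-0", version)      # same logical page, updated 8 times
print(ftl.read("page-0"), ftl.write_counts)  # 7 [2, 2, 2, 2]
```

Even though the same logical page is updated eight times, no physical block is written more than twice. Because the old copy stays intact until the mapping is repointed, an interrupted write in this model leaves the previous version readable.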

An SSD can be seen as a small distributed file system made of a name node (the logical address map) and data nodes (the physical blocks).

One question that might come to mind: what happens to blocks whose data never changes? Do they ever get used up to their maximum number of writes?

Our hardware friends are very intelligent! They have come up with two algorithms:

Dynamic wear leveling

Blocks that are being rewritten are written to new blocks. This algorithm is not optimal because blocks holding read-only data never receive the same volume of writes, so the disk can become unusable even though some of its blocks could still take many more writes.

Static wear leveling

This approach tries to balance wear across all blocks by also selecting blocks that contain static data and relocating that data, so that their lightly worn blocks can take new writes. This is a very important algorithm when software is built around immutable files.
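The difference between the two algorithms is easier to see with numbers. The simulation below is a rough sketch under simplifying assumptions of my own: the function `simulate`, the `threshold` parameter, and the rule "relocate static data onto the most-worn free block once the wear gap exceeds the threshold" are illustrative choices, not a description of any vendor's firmware.

```python
def simulate(num_blocks, cold_blocks, writes, static_leveling, threshold=4):
    """Return per-block write counts after `writes` updates of hot data."""
    wear = [0] * num_blocks
    cold = set(range(cold_blocks))  # blocks holding data that never changes
    for block in cold:
        wear[block] = 1             # the one-time write of the static data

    for _ in range(writes):
        free = [b for b in range(num_blocks) if b not in cold]
        # Dynamic wear leveling: a rewrite goes to the least-worn free block.
        target = min(free, key=lambda b: wear[b])
        wear[target] += 1

        if static_leveling:
            coldest = min(cold, key=lambda b: wear[b])
            hottest = max(free, key=lambda b: wear[b])
            if wear[hottest] - wear[coldest] > threshold:
                # Move static data onto the most-worn free block (one extra
                # write) and release the barely used block for new writes.
                wear[hottest] += 1
                cold.remove(coldest)
                cold.add(hottest)
    return wear


dynamic = simulate(num_blocks=8, cold_blocks=4, writes=400, static_leveling=False)
static = simulate(num_blocks=8, cold_blocks=4, writes=400, static_leveling=True)
print("dynamic only:", dynamic, "spread", max(dynamic) - min(dynamic))
print("with static :", static, "spread", max(static) - min(static))
```

With dynamic leveling only, the four blocks holding static data stay at a single write while the other four absorb all 400 updates. With static leveling the wear is spread over all eight blocks, at the price of a few extra relocation writes.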

Immutable designs are now affordable and are key to building successful distributed systems.