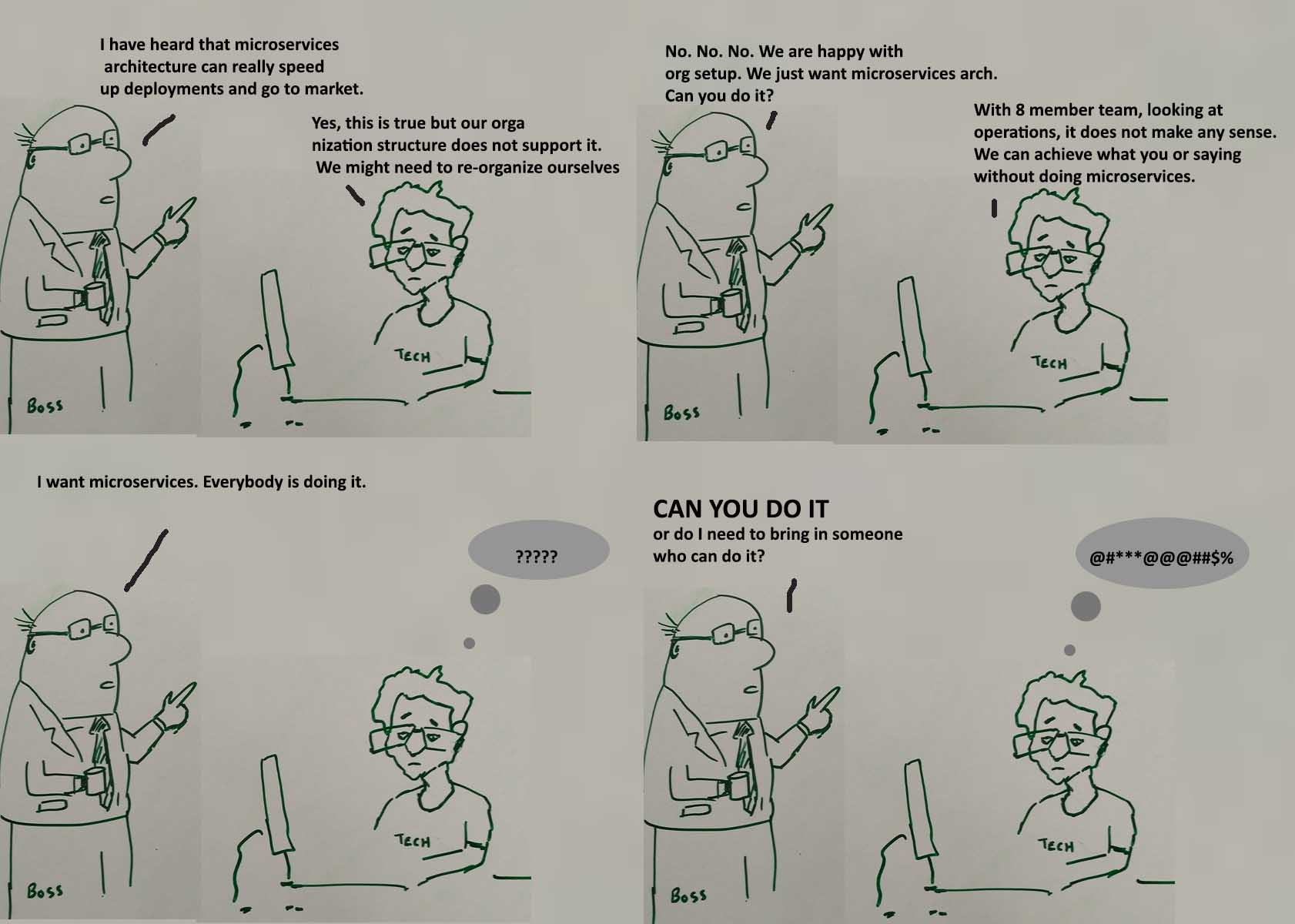

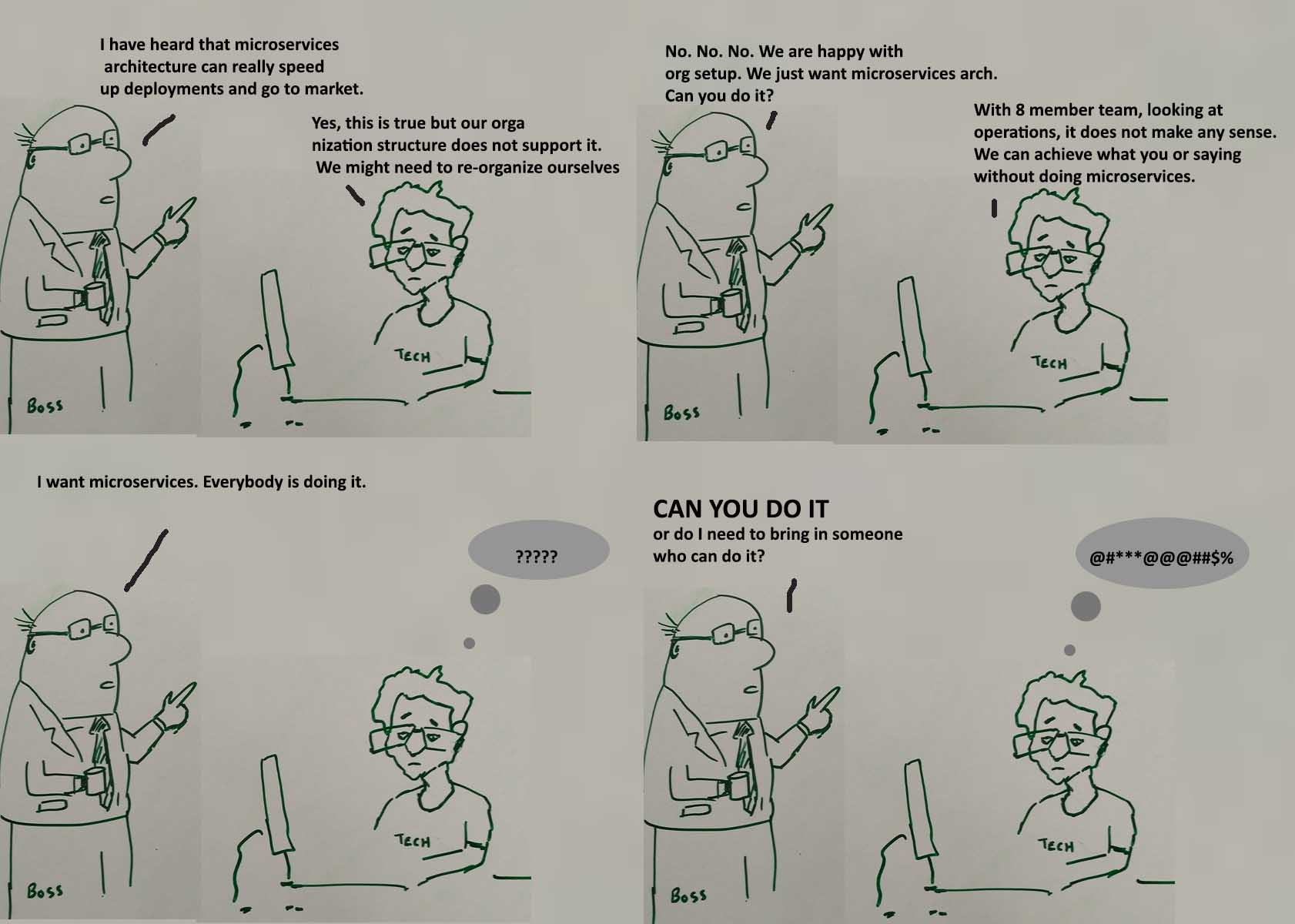

Micro services is great and many company comes and talk about it on how it is used for scaling team, product etc

Microservices has dark side also and as a programmer you should about it before going on ride.

In this post i will share some of the myths/dark side about micro services

For simple investigation you have to login to many machines , looks in logs , make sure you get the timing right on server etc.

Without proper monitoring tools you can't do this, you need ELK or DataDog type of things.

Microservices has dark side also and as a programmer you should about it before going on ride.

In this post i will share some of the myths/dark side about micro services

- We needs lots of micro services

- Naming micro services will be easy

Computer science has only 2 complex problem and one of them is "naming", very soon you will run out of options when you have 100s of them.

- Non functional requirement can be done later

- Polyglot programming/persistence or something poly...

Software engineer likes to try latest cutting edge tool so they get carried away by this myth that we can use any language or any framework or any persistence.

Think about skills and maintenance overhead required for poly.... thing that is added, if you have more than 2/3 things then it is not going to fit in head and you have to be on pager duty.

- Monitoring is easy

For simple investigation you have to login to many machines , looks in logs , make sure you get the timing right on server etc.

Without proper monitoring tools you can't do this, you need ELK or DataDog type of things.

- Read and writes are easy

This thing also get ignored now you are in distributed transaction world and it is not good place to be in and to handle this you need eventual consistent system or non available system.

- Everything is secure

- My service will be always up

- I can add break point to debug it

This is just not possible because now you are in remote process and don't know how many micro services are involved in single request.

- Testing will be same

- No code duplication

- JSON over HTTP

This has resulted in explosion of REST based API for every micro services and is the reason of why many system are slow because they used text based protocol with no type information.

One thing you want to take away from anti pattern of micro services is that rethink that do you really need Json/REST for every service or you can use other optimized protocol and encoding.

- Versioning is my grandfather job

- Team communication remains same.

You can have more silos because no one knows about whole system

- Your product is of google/facebook/netflix scale

If you can't write decent modular monolithic then don't try micro services because it is all about getting correct coupling and cohesion. Modules should be loosely coupled and high cohesive.

No free lunch with micro services and if you get it wrong then you will be paying premium price :-)